Introduction – What is Gemma?

Hey there, fellow tech enthusiasts! Today, we’re diving headfirst into the exciting world of AI with a new player on the scene: Gemma. Now, before you roll your eyes and think, “Oh great, another AI model,” hear us out. Gemma isn’t your run-of-the-mill AI; she’s like the cool kid who just rolled into town on a skateboard, turning heads and stealing the spotlight.

A Family Of Lightweight Open AI Models

So, what’s the deal with Gemma?

Well, picture this: Gemma is a family of lightweight, state-of-the-art open models, born from the minds of the geniuses at Google DeepMind and other teams across Google. Inspired by the shining star that is Gemini, Gemma is here to shake things up and inject some pizzazz into the AI world. And just like her name suggests (it means “precious stone” in Latin), Gemma is one gem of a model.

But wait, there’s more!

Alongside Gemma’s dazzling model weights, Google is throwing in some extra goodies to sweeten the deal. We’re talking about tools to support developer innovation, foster collaboration, and guide responsible use of Gemma models. Because let’s face it, we all need a little help navigating the wild world of AI, right?

And guess what? Gemma isn’t just for the tech elite. Nope, she’s available to everyone, starting today. Whether you’re a coding whiz or a total newbie, Gemma has something for you. We’re talking model weights in two sizes, a Responsible Generative AI Toolkit (try saying that five times fast), and toolchains for inference and supervised fine-tuning across all major frameworks. Phew, that’s a mouthful!

But wait, there’s more!

Gemma isn’t just a one-trick pony. Oh no, she’s versatile. You can fine-tune Gemma models to suit your specific needs, whether you’re into summarization or retrieval-augmented generation. Plus, she plays nice with all your favorite tools and systems, from PyTorch to JAX to Hugging Face Transformers. Talk about a team player!
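Curious what that looks like with Hugging Face Transformers? Here's a minimal sketch, not an official recipe. It assumes the `transformers` and `torch` packages are installed and that you've accepted the Gemma terms on the Hugging Face Hub. The checkpoint names follow the `google/gemma-<size>` pattern used on the Hub for the two sizes (2B and 7B, covered below), and `gemma_checkpoint` and `generate_text` are hypothetical helper names of our own, not part of any library.

```python
def gemma_checkpoint(size: str = "2b", instruction_tuned: bool = True) -> str:
    """Build a Hub checkpoint name such as 'google/gemma-2b-it'."""
    if size not in ("2b", "7b"):
        raise ValueError(f"unknown Gemma size: {size!r}")
    suffix = "-it" if instruction_tuned else ""
    return f"google/gemma-{size}{suffix}"


def generate_text(prompt: str, size: str = "2b", max_new_tokens: int = 64) -> str:
    """Download the checkpoint (several GB) and generate a completion."""
    # Imported lazily so the helper above works without the heavy dependencies.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    name = gemma_checkpoint(size)
    tokenizer = AutoTokenizer.from_pretrained(name)
    model = AutoModelForCausalLM.from_pretrained(name)
    inputs = tokenizer(prompt, return_tensors="pt")
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)


if __name__ == "__main__":
    # Only builds the name; call generate_text(...) to actually run the model.
    print(gemma_checkpoint("2b"))
```

Calling `generate_text("Summarize: ...")` is where the real download and inference happen, so expect the first run to take a while.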

How To Use?

Google has provided complete documentation for using Gemma models. You can find it here. Starting small? No problemo! Grab the 2B parameter size for a lighter load on your resources. It’s like the compact car of AI models: small, efficient, and perfect for cruising around on your mobile or laptop.

Feeling a bit more adventurous? Go big with the 7B size, the SUV of AI models! With more horsepower under the hood, this bad boy is ready to tackle heavier tasks on your desktop or small server.
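To get a rough sense of why size matters, here's a back-of-the-envelope sketch of how much memory the weights alone would take. It assumes nominal parameter counts of 2 and 7 billion (the real checkpoints differ somewhat) and ignores activations and caches, so treat the numbers as a lower bound, not a spec. The `weight_memory_gib` helper is our own illustration.

```python
# Bytes needed to store one parameter in a few common numeric formats.
BYTES_PER_PARAM = {"float32": 4, "bfloat16": 2, "int8": 1}


def weight_memory_gib(num_params: float, dtype: str = "bfloat16") -> float:
    """Approximate memory needed just to hold the weights, in GiB."""
    return num_params * BYTES_PER_PARAM[dtype] / 2**30


if __name__ == "__main__":
    # Nominal sizes only; these are assumptions, not exact parameter counts.
    for label, params in (("2B", 2e9), ("7B", 7e9)):
        for dtype in BYTES_PER_PARAM:
            print(f"{label} in {dtype}: ~{weight_memory_gib(params, dtype):.1f} GiB")
```

Under these assumptions, the 2B model in bfloat16 needs a little under 4 GiB for its weights and the 7B model around 13 GiB, which is roughly why one suits a laptop and the other a desktop or small server.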

But wait, there’s more! With Gemma, you’re not tied down to just one platform. Thanks to the multi-backend support in Keras 3.0, you can run these models on JAX, PyTorch, or TensorFlow, or skip Keras and use the native JAX and PyTorch implementations. It’s like having a Swiss Army knife for your AI needs!
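Here's a small sketch of how that backend switch might look in practice. Keras 3 reads the `KERAS_BACKEND` environment variable when it is first imported, so the choice has to be made before any Keras import. We're assuming the `keras-nlp` package is installed and that the `gemma_2b_en` preset is accessible with your Kaggle credentials; `select_backend` and `load_gemma` are hypothetical helper names for illustration.

```python
import os

SUPPORTED_BACKENDS = ("jax", "torch", "tensorflow")


def select_backend(name: str) -> None:
    """Tell Keras 3 which backend to use; must run before `import keras`."""
    if name not in SUPPORTED_BACKENDS:
        raise ValueError(f"unsupported backend: {name!r}")
    os.environ["KERAS_BACKEND"] = name


def load_gemma(preset: str = "gemma_2b_en"):
    """Load a Gemma causal LM through KerasNLP (downloads the weights)."""
    # Lazy import so the backend chosen above takes effect first.
    import keras_nlp

    return keras_nlp.models.GemmaCausalLM.from_preset(preset)


if __name__ == "__main__":
    select_backend("jax")
    print(os.environ["KERAS_BACKEND"])
```

After `select_backend("jax")`, something like `load_gemma().generate("What is Keras?", max_length=64)` should run on JAX. Swap in `"torch"` or `"tensorflow"` and the rest of the code stays the same, which is the whole point of the multi-backend design.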

And guess what? Getting your hands on Gemma is as easy as pie—just head over to Kaggle Models and start downloading!

Now, let’s talk tuning. No, not guitar tuning. Model tuning! You can customize Gemma’s behavior to suit your specific needs, making it as sharp as a tailored suit. But be warned: tuning Gemma for one task might make it a pro at that thing while becoming a bit rusty at others. Choose wisely between the “Pretrained” and “Instruction tuned” versions, depending on whether you want a raw talent that hasn’t been trained for any specific task or a conversational genius that’s been trained to respond to chat-style input.
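One practical difference between the two versions: the instruction-tuned models expect conversations wrapped in special turn markers. The sketch below builds such a prompt by hand. The `<start_of_turn>` and `<end_of_turn>` control tokens and the `user` and `model` role names come from Gemma's prompt-formatting documentation, not from this post, and `format_chat_prompt` is a hypothetical helper of ours. In real use the tokenizer typically handles this for you and also adds a beginning-of-sequence token.

```python
def format_chat_prompt(turns: list[tuple[str, str]]) -> str:
    """Render (role, text) turns in Gemma's instruction-tuned chat format."""
    parts = []
    for role, text in turns:
        if role not in ("user", "model"):
            raise ValueError(f"unknown role: {role!r}")
        parts.append(f"<start_of_turn>{role}\n{text}<end_of_turn>\n")
    # Leave an open model turn so the model knows it should answer next.
    parts.append("<start_of_turn>model\n")
    return "".join(parts)


if __name__ == "__main__":
    print(format_chat_prompt([("user", "Explain fine-tuning in one sentence.")]))
```

The pretrained version has no such convention: you just hand it raw text and it continues from there.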

So there you have it, folks! Gemma—your versatile, customizable, and oh-so-smart AI companion, ready to tackle any task you throw its way. Get yours today and join the AI revolution! 🚀

Benchmarks

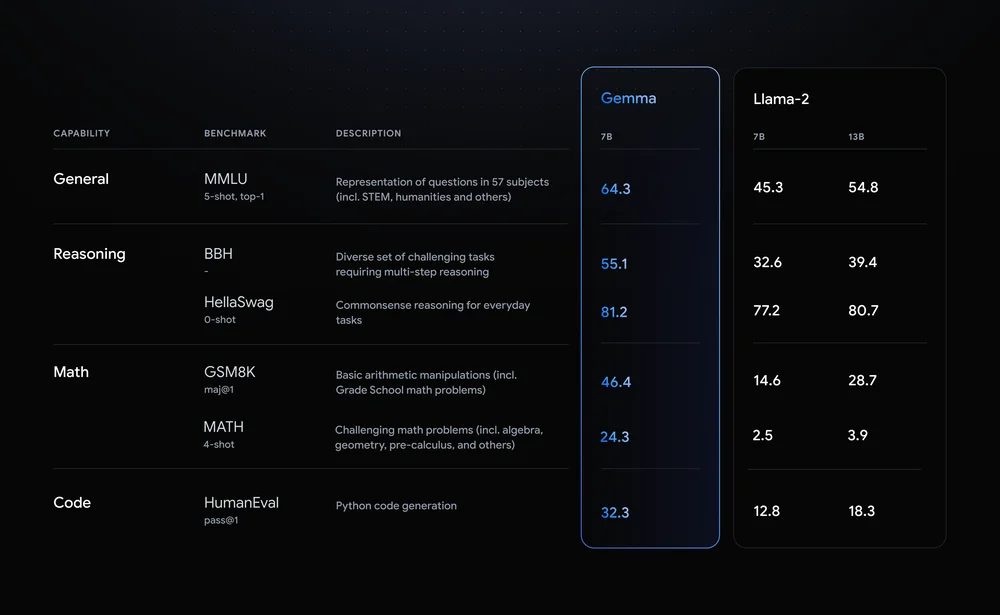

You know what’s cool about Gemma? She’s not just flying solo; she’s got some serious backup from her big sister, Gemini. Yeah, that’s right. Gemma shares technical and infrastructure components with Gemini, Google’s top-of-the-line AI model that’s turning heads left and right. And you know what that means? According to Google, Gemma 2B and 7B deliver best-in-class performance for their sizes compared to other open models out there.

But here’s the kicker: Gemma doesn’t need a fancy-pants supercomputer to do her thing. Nope, she’s perfectly happy running on your everyday developer laptop or desktop. And get this: despite her smaller size, Google reports that Gemma outperforms significantly larger models on key benchmarks. And the best part? She does it all while playing by the rules and sticking to Google’s strict standards for safety and responsibility. Now that’s what we call a rockstar AI!

Responsible AI

And here’s the best part: Gemma isn’t just about performance; she’s about responsibility too. That’s right, Google says it designed Gemma models to be safe and reliable. We’re talking automated techniques to filter certain personal information and other sensitive data out of the training sets, plus extensive fine-tuning and reinforcement learning from human feedback (RLHF) to align the instruction-tuned models with responsible behavior. Because let’s face it, we’ve all seen enough rogue AIs in the movies to know that we don’t want that in real life.

So, what are you waiting for? Dive into the wonderful world of Gemma and see for yourself what all the fuss is about. Trust us, you won’t be disappointed. Gemma: the AI rockstar you never knew you needed.